I’m trying to transfer a bunch of files between two Amazon S3 buckets and I’m not sure of the best way to do it. I’ve never copied files between buckets before, and I want to make sure I don’t accidentally lose any data. Can someone explain the steps or suggest the easiest method for this process?

How I Move Files Between S3 Buckets (Without Losing My Mind)

Alright, let me just spill the beans on moving files between two S3 buckets because—let’s be real—Amazon never makes it as easy as dragging a folder across your desktop. Below, I’ll walk you through this, step by step, with a side helping of real talk and not, like, that shiny doc-speak you always find on official sites.

1. Vanilla AWS CLI – Yes, The Terminal Is Still King

Let’s dive in. Honestly, the CLI gets the job done with a single command, if you don’t mind the Terminal. Here’s how I’d spell it out:

aws s3 sync s3://source-bucket/path/ s3://destination-bucket/path/

- This “sync” right here is your pal; it copies everything from that source path straight into your destination, preserving folders and all.

- Wanna move an entire bucket’s contents? Just trim those

/path/parts out. - If you don’t want to overwrite files in the destination (because, say, there’s that one weird file you tweaked already), toss in

--excludeor--dryrun. Example:

aws s3 sync s3://source s3://destination --exclude '*.tmp'

You’ll need to have AWS CLI installed, configured with access keys that can actually see those buckets (been burned by the ‘Access Denied’ message more times than I’d care to admit).

2. AWS Console – Point, Click, Pray

You ever tried to do big file moves using the console? You can select files, hit “Copy” at the top, pick another bucket, and paste. It works… for like, a handful of files. But if you have directories upon directories—it’s basically “good luck and check back in a couple of hours.” Godspeed if your browser crashes.

Pro tip: If you’re on a metered internet, steer clear; you’ll regret your life choices when you see those download speeds.

3. Enter Third-Party Apps – The Bridge

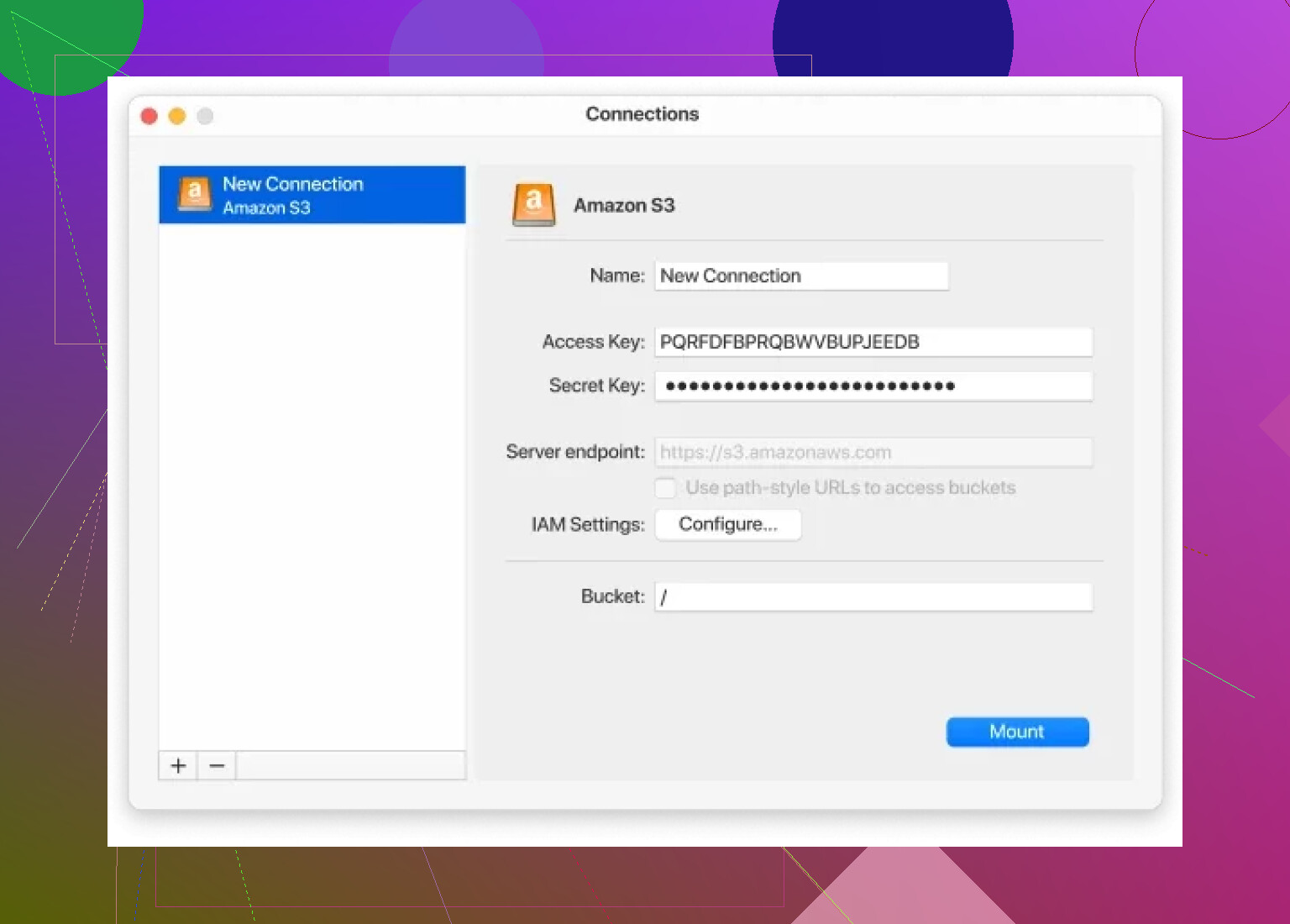

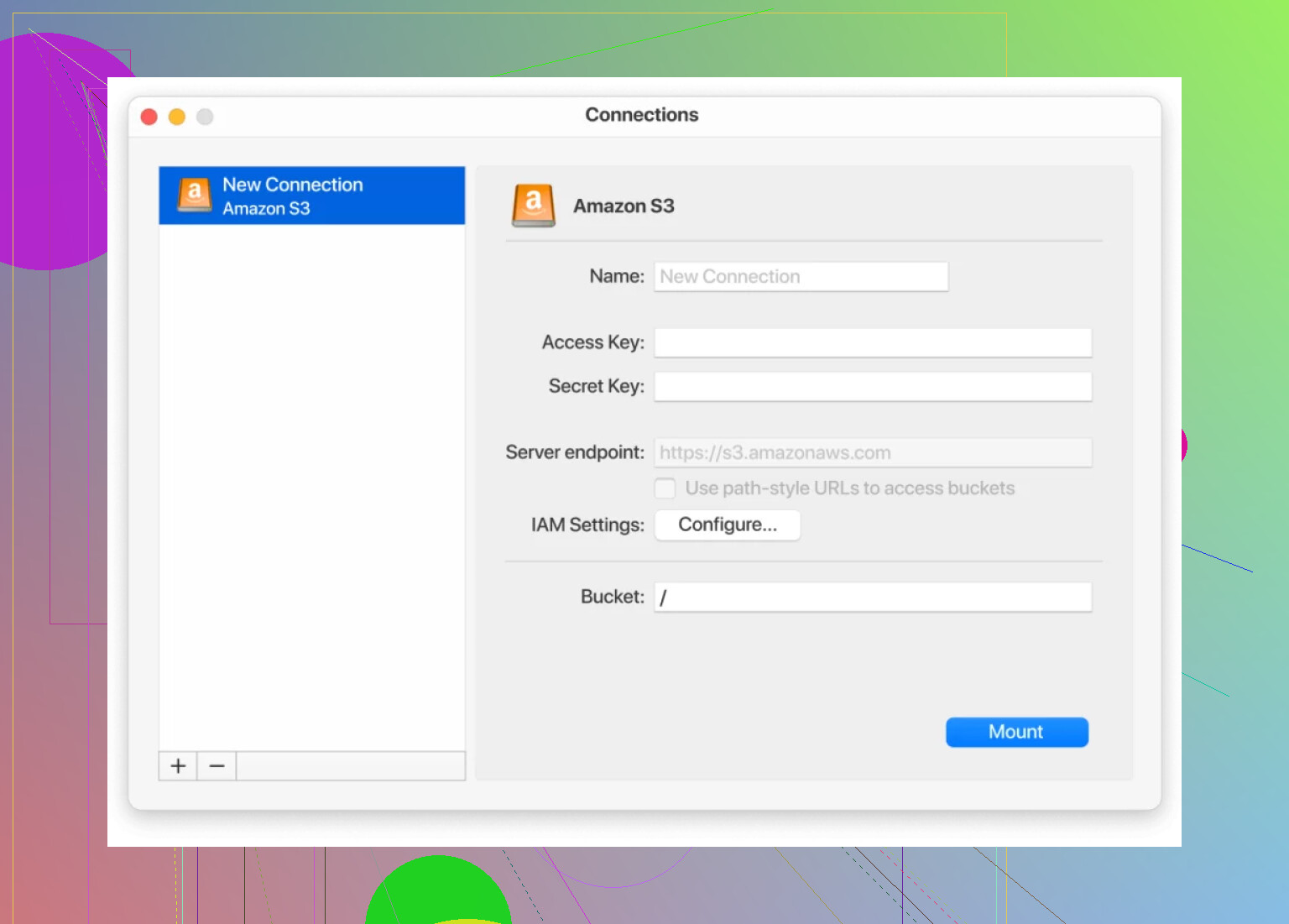

If you’d rather not wrangle with scripts or AWS’s interface, some tools just make the whole S3 juggling act way smoother. There’s an app—CloudMounter.—that basically turns your cloud storage into just another disk on your Mac. So, you can open your buckets straight from Finder. Drag-n-drop, Command+C, Command+V. That’s it.

No, it won’t launch your rocket to Mars, but if you’re copy-pasting daily, it’s a sanity saver.

TL;DR

- CLI = best for power users, automations, nerdy glory

- Console = okay-ish for quick moves, less so for bulk jobs

- Third-party apps (CloudMounter, etc) = most like using a regular folder; less error, more chill

Seriously, whatever you do, check the permissions first or nothing’s making it across, no matter the route.

Not gonna lie—sometimes I feel like Amazon’s secretly running a contest for “most confusing way to move some frickin’ files.” @mikeappsreviewer already hit the highlights on CLI and the console, but let’s look at this from a slightly different angle: what’s your pain threshold for this nonsense?

If you’re not married to AWS’s own tools, there’s a whole world of automation and backup-friendly stuff out there—think of it as your insurance against accidental data obliteration. I’m a fan of scripting, but my favorite “I-don’t-trust-myself-not-to-delete-everything” method is setting up a lifecycle rule to copy instead of move, then clean up after you’ve checked the files landed safely. Bonus: S3 supports versioning, so you can roll back if you botch something. If you’re mega-paranoid (like me after coffee #3), enable versioning on both buckets—you’ll always have the older versions to fall back to if you nuke something by accident.

And honestly, for anyone who wants a faster, visual approach, CloudMounter’s actually not snake oil. Makes S3 buckets look like mounted drives, and you can drag-and-drop like it’s 1998 and you’re moving folders on Windows 98 (nostalgia!). It’s not bulletproof if you need wild automations, but for casual volume jobs? Unglamorous but effective.

One thing tho, copying buckets with terabyte-sized objects? None of these GUI tools or even the CLI will be pain-free if your bandwidth’s trash. If you’re stuck in AWS’s universe, S3 Batch Operations is a thing. It’s like launching a mini-migration inside AWS—set your manifest, define the copy operation, and let AWS manage the retries. (This is ideal for the control freaks among us.)

TL;DR: Don’t trust a move operation until you verify the goods landed. Safest? Copy > validate > delete. Use CloudMounter (really, it’s idiotproof), try S3 batch ops for huge jobs, and FOR THE LOVE, test with a handful of dummy files first so you don’t have to explain to your boss why sales reports from 2019 are gone forever.

Honestly, the stress about “not losing any data” while moving S3 files is so real—I’ve lost sleep triple-checking myself. After reading what @mikeappsreviewer and @cacadordeestrelas said (love the drama and the paranoia, by the way), I’ll just add: if you’re skittish about command lines or third-party tools (and can’t be trusted not to nuke entire buckets—been there), AWS actually offers one underdog solution nobody ever seems to hype up: S3 replication.

Yeah, it’s not “move everything now,” but it’ll mirror new and existing objects from bucket A to bucket B automatically, and it’s built for data integrity. Turn on versioning in both buckets, set up a replication rule in the S3 console, and AWS does the heavy lifting, including redundancy across regions if you want. No download/upload, no overwriting unless you explicitly allow it, and absolutely zero terminal vibes. Bonus: S3 keeps a log of all replicated objects, which is chef’s kiss when tracking if files landed.

But, real talk: Replication is sloooow with large buckets, and doesn’t touch old files unless you explicitly retroactive it. For big jobs, I’ll sometimes mix strategies—start with CLI sync for a bulk move, then enable replication to catch anything I missed later, especially if there’s ongoing uploads.

Oh, and on CloudMounter? I was suuuper skeptical until I actually tried dragging an entire Photos folder between buckets—felt like living in the distant future (1998 Windows, lol). Not the tool for million-object datasets, but if you just want muscle memory-level file moves and to avoid CLI anxiety or console lag, it’s worth checking out. Way less stressful than “move-and-pray” via the AWS website.

So, yeah, copying in S3 = not just one path. Test a sample, verify the results, and for the love of all that’s digital, be paranoid and back up twice. Anyone who says “just do this one command, never fails” hasn’t been burned by AccessDenied at 2am.

Sometimes I feel like moving files between S3 buckets is Amazon’s way of testing how much patience you’ve got. Seriously, everyone’s pushing CLI (aws s3 sync), and yeah, it works fine… except the first time you nuke existing files or get stonewalled by that “Access Denied.” The AWS console works in a pinch if you only need a few files and don’t mind your browser choking on 1,000 objects, but you’ll be rechecking tabs and praying for the copy bar to show “Success” for hours.

Honestly, CloudMounter is nowhere near as hyped as it should be. It’s not just for Mac power-users—it treats your S3 buckets like any disk; cut/paste/drag, just like working with folders locally. You skip the upload/download grind and don’t get locked into the AWS UI. Major pros: It’s intuitive and fast for human-scale jobs, especially for organizing or dragging over a folder at a time.

But—it’s not magic. Big dataset? You’ll hit Finder lag, and it’s not built for troubleshooting transfer failures or handling granular copy errors (nothing beats the surgical precision of AWS CLI with all its verbose flags there). So I wouldn’t recommend it for, say, nightly backups of live production data or million-file syncs. More like, “I need to move these 10,000 JPEGs and I want to feel in control, not like I’m setting off a time bomb.” Compared to CLI and AWS’s native tools, or even automation via S3 batch operations, it’s way more approachable. If you want a “Finder window but for S3,” it’s a winner.

Some might say S3 replication solves everything, but setting up versioning (plus all that waiting) isn’t always practical for one-time bulk jobs. Others stick with command-lines for error logs and speed, and both are fair. But for a middle ground where you don’t want to stress, CloudMounter is worth a shot—Keep those permissions tight, always validate after transfer, and never, ever trust just one method without double-checking!